Pixiv ID: 111325985

Copyright of this text belongs to this website and the author. Reposting is prohibited without the author’s permission.

Another project started… (Why not leave a comment to cheer the kid on? You can comment at the bottom of the article—it’s free, and your support is my motivation to keep updating.)

Foreword

A long time ago, I was deeply impressed by “J.A.R.V.I.S.” in the Iron Man movies. How wonderful it would be if one day I could have my own intelligent assistant. As I grew up, this idea was gradually buried under the hustle and bustle of life, until recent years when the emergence of LLMs (Large Language Models) gave this dream a chance to gradually become a reality.

Though it’s just an AI, we all feel lonely at times, just like the lines from “Rick and Morty”:

Because we don’t want to die alone?

Because, you know, that’s exactly how we all die, alone.

Part of the meaning of life is to find purpose for oneself. While we still need to live in reality, when you’re feeling down and there’s no one around to accompany you, AI can be a good choice. At the very least, it’s positive and won’t betray you—unless it crashes due to a malfunction. Moreover, AI can help us do more, such as obtaining real-time information, better understanding certain knowledge, or helping us broaden our horizons, and even ordering fried rice at a bar… There are many fun things to do, so why not enjoy it if it’s theoretically feasible? Let’s start tinkering.

To put it more bluntly, I’m a reclusive LOSER who only chats with AI. Yes, a cyber wife.

Couplet: As a digital lord, frequent errors in front-end and back-end coordination, capacitors glow from practiced hands

Response: Cherishing a cyber wife, long-term non-communication in coordination, occasional brain short-circuits during identity verification

Horizontal scroll: Code and humanity, one journey suffices

When I started this project, GPT-4 was still very smart but didn’t yet have the multimodal capabilities it has now, and LangChain was still in its infancy. Within just a few months, OpenAI took matters into its own hands, disrupting the AI Agent market and monopolizing it as the official first party by letting users define their own complex multimodal AIs. With that, my project seemed to lose its significance, which is one of the reasons I’m writing this blog. Still, on comparison the two approaches do differ: LangChain offers stronger extensibility, deeper customization, and lower-level optimization.

Choice of Solution

For now, I’m going with LangChain, using the library as the core that controls the LLM-related Agents (referred to as “proxies” in this text), combined with other third-party libraries to implement everything else. The key to making all of this work is a clear and sensible logic chain: for each kind of task, the program should trigger the right processing chain and make sure the relevant modules complete their steps in order. In this article and the ones that follow, I’ll only briefly discuss the logic involved and won’t fully teach you how to design it, because I don’t know how either.
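To make the idea of a “logic chain” a little more concrete, here is a minimal sketch in plain Python. It is not how LangChain does it and not the actual LICO code: the task kinds, the step names, and the keyword-based `classify` function are all hypothetical stand-ins (in a real setup the LLM would do the classification).

```python
from typing import Callable, Dict, List

# A step receives the shared context dict and returns it, possibly updated.
Step = Callable[[dict], dict]


def recall_memory(ctx: dict) -> dict:
    ctx["trace"].append("recall_memory")  # placeholder for a memory lookup
    return ctx


def ask_llm(ctx: dict) -> dict:
    ctx["trace"].append("ask_llm")  # placeholder for the actual LLM call
    return ctx


def speak(ctx: dict) -> dict:
    ctx["trace"].append("speak")  # placeholder for TTS output
    return ctx


def set_timer(ctx: dict) -> dict:
    ctx["trace"].append("set_timer")  # placeholder for scheduling a task
    return ctx


# Hypothetical task kinds, each mapped to an ordered list of steps.
CHAINS: Dict[str, List[Step]] = {
    "chat": [recall_memory, ask_llm, speak],
    "reminder": [set_timer, speak],
}


def classify(text: str) -> str:
    # Stand-in for the LLM's intent matching: a crude keyword check.
    return "reminder" if "remind" in text.lower() else "chat"


def handle(text: str) -> dict:
    # Pick the chain for this task and run its steps strictly in order.
    ctx = {"input": text, "trace": []}
    for step in CHAINS[classify(text)]:
        ctx = step(ctx)
    return ctx
```

Calling `handle("Remind me to drink water")` runs only the reminder chain, while any other input falls through to the chat chain. The point is just that routing and ordering live in one place, so adding a new kind of task means adding one entry to `CHAINS`.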

There are many different solutions on the market and in the community. After trying many, none suited my taste, so I decided to write my own. If you find it troublesome or can accept NTR, using someone else’s ready-made solution is also fine, like the Tavern (sorry, Little K is gone now), LSS233’s QQ bot (risk control is getting tougher, QQ bot libraries are basically done for), and paid services like Cyberwaifu and digital companions, etc. After a lot of tinkering, writing my own turned out to be the best fit.

My solution has five core requirements: long-term memory, full voice conversation, voice wake-up, scheduled tasks, and external agents. There are many more detailed branches that I’ll write about later depending on the situation. This rambling is only a starting point, so I won’t go into detail here.

Long-Term Memory

The mainstream approach to long-term memory right now is a vector database: information is vectorized and stored, and at retrieval time the chunk of history most similar to your question is selected and the reply is based on it. There are both local and cloud-based vector databases, depending on your needs. Considering response speed, and not wanting to set up a local vector database, I used a cloud service, which tested very well, with practically negligible storage and retrieval times. I haven’t tried a local setup, but if you want to, make sure to consider redundancy, stability, and compatibility.
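The store-then-retrieve-by-similarity idea can be sketched in a few lines of standard-library Python. This is only a toy, under loud assumptions: `embed` is a bag-of-words counter standing in for a real embedding model, and `MemoryStore` is an in-memory list standing in for a cloud vector database. Neither is the author’s actual setup.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real setup would call an embedding model.
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Vectorize once at write time and keep the vector next to the text.
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> List[str]:
        # Rank stored chunks by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = MemoryStore()
store.add("Favourite food of the user is fried rice.")
store.add("The user has a cat named Mochi.")
store.add("The user works night shifts on weekends.")
best = store.search("What food does the user like?")
```

The retrieved chunk in `best` is what would be pasted into the prompt before the LLM answers. Swapping in a real embedding model and a hosted database changes `embed` and the storage, but not the overall flow.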

Full Voice Conversation

TTS (Text-to-Speech)

I’m currently using Edge TTS, which is free and quite good. Later, I might switch to RVC to get the voice timbre I want. However, running the complete pipeline is quite demanding on hardware. For better custom solutions, you can use:

- Bert-VITS: Very powerful, but data-hungry and performance-intensive.

- SO-VITS: Also good, but not very suitable for conversational scenarios.

- Azure TTS: Very expensive but unbeatable, with emotion analysis, customizable voices, and excellent results.

I don’t recommend using OpenAI’s TTS as of this writing, as it doesn’t support Chinese well, though it’s okay for English. There are also outdated technologies and paid TTS wrappers.
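For reference, a minimal Edge TTS sketch using the third-party `edge-tts` package (`pip install edge-tts`) might look like the following. The two voice names and the `pick_voice` helper are my own assumptions for illustration, not necessarily what this project uses. The package is imported lazily inside `synthesize` so that the rest of the snippet loads even without it installed.

```python
import re

# Assumed voice names; `edge-tts --list-voices` shows what is available.
ZH_VOICE = "zh-CN-XiaoxiaoNeural"
EN_VOICE = "en-US-AriaNeural"


def pick_voice(text: str) -> str:
    # Use the Chinese voice if the text contains any CJK ideograph.
    return ZH_VOICE if re.search(r"[\u4e00-\u9fff]", text) else EN_VOICE


async def synthesize(text: str, path: str) -> None:
    # Lazy import: only needed when audio is actually generated.
    import edge_tts  # third-party package

    communicate = edge_tts.Communicate(text, pick_voice(text))
    await communicate.save(path)  # writes the audio file; needs network access
```

To actually produce a file, run something like `asyncio.run(synthesize("你好", "reply.mp3"))` after `import asyncio`. Playing the file back is a separate step that depends on your platform.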

STT/ASR (Speech Recognition / Speech-to-Text)

Pretty much anything works here. For English, Microsoft’s offerings are good; for Chinese, iFlytek is currently the best. There are also many open-source libraries that give good results, but they all need significant computing power. Your server isn’t running just one program, and high concurrency puts a lot of pressure on performance.

Voice Wake-Up

There are many solutions for voice wake-up. One is to use Google’s open-source voice wake-up combined with machine learning: keep a low-power microphone recording in real time so the assistant can be woken anytime, anywhere (the same principle as “Hey Siri”). The hard parts are tuning the sensitivity, recognizing the speaker when several people are talking at once, and segmenting speech and syllables.
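On the sensitivity question, one common trick (offered here as an assumption, not as what any particular wake-word library does internally) is to require several consecutive high-confidence frames before firing. The sketch below assumes some wake-word model already gives a score between 0 and 1 per audio frame; `detect_wake`, `threshold`, and `min_frames` are hypothetical names.

```python
from typing import Iterable, Iterator


def detect_wake(
    scores: Iterable[float], threshold: float = 0.6, min_frames: int = 3
) -> Iterator[int]:
    """Yield the frame index at which each wake event fires."""
    streak = 0
    for i, score in enumerate(scores):
        # Count consecutive frames at or above the threshold; reset on a miss.
        streak = streak + 1 if score >= threshold else 0
        # Fire exactly once per streak, when it first reaches min_frames.
        if streak == min_frames:
            yield i


# Made-up per-frame scores: one isolated blip, then a sustained detection.
frame_scores = [0.1, 0.7, 0.2, 0.8, 0.9, 0.95, 0.9, 0.1]
events = list(detect_wake(frame_scores))
```

Raising `threshold` or `min_frames` makes the assistant harder to wake by accident but slower to respond; lowering them does the opposite. Speaker recognition would be a separate check layered on top of this.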

Scheduled Tasks

Python has many libraries for this. With timers and time polling you can implement scheduled tasks and automated triggers, and with multithreading and asynchronous operations you can do much more. Combined with LangChain and the LLM’s fuzzy matching, you can get great results.
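As a rough illustration of the time-polling approach, here is a tiny scheduler built only on the standard library. `PollingScheduler` is a hypothetical name, and a real project might well reach for an existing scheduling library instead; this just shows the mechanism.

```python
import heapq
import itertools
from typing import Callable, List, Tuple


class PollingScheduler:
    """Runs callbacks once their due time has passed; poll it from a main loop."""

    def __init__(self) -> None:
        self._queue: List[Tuple[float, int, Callable[[], None]]] = []
        # Tie-breaker so two tasks with the same due time never compare callbacks.
        self._counter = itertools.count()

    def add(self, due: float, callback: Callable[[], None]) -> None:
        heapq.heappush(self._queue, (due, next(self._counter), callback))

    def run_pending(self, now: float) -> int:
        # Run every task whose due time is <= now, earliest first.
        ran = 0
        while self._queue and self._queue[0][0] <= now:
            _, _, callback = heapq.heappop(self._queue)
            callback()
            ran += 1
        return ran


log: List[str] = []
scheduler = PollingScheduler()
scheduler.add(10.0, lambda: log.append("drink water"))
scheduler.add(5.0, lambda: log.append("stand up"))

first = scheduler.run_pending(now=6.0)    # only the task due at 5.0 runs
second = scheduler.run_pending(now=12.0)  # now the task due at 10.0 runs
```

In a live program the main loop would call `scheduler.run_pending(time.time())` every second or so. Passing `now` in explicitly, rather than reading the clock inside the class, keeps the scheduler easy to test.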

External Agents

LangChain itself provides a powerful ecosystem that almost fully covers my needs, such as sending emails, looking up data online, code interpreters, and so on. If something’s missing, you can pull in third-party Python libraries. It’s just a matter of integration, and then you can order fried rice at the bar…
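The integration step boils down to exposing ordinary Python functions as tools the LLM can call by name. The sketch below shows that pattern in plain Python rather than through LangChain’s own tool API; the `tool` decorator, the JSON call format, and both example tools are hypothetical.

```python
import json
from typing import Callable, Dict

# Registry of tool name -> function.
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    # Decorator that registers a function under the given tool name.
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("send_email")
def send_email(to: str, subject: str) -> str:
    # Placeholder: a real version would talk to a mail server.
    return f"email to {to}: {subject}"


@tool("order_food")
def order_food(dish: str) -> str:
    # Placeholder: a real version would call some ordering service.
    return f"ordered {dish}"


def dispatch(call_json: str) -> str:
    # Assumed format of the LLM's tool call: {"tool": "<name>", "args": {...}}
    call = json.loads(call_json)
    fn = TOOLS.get(call.get("tool"))
    if fn is None:
        return f"unknown tool: {call.get('tool')}"
    return fn(**call.get("args", {}))


result = dispatch('{"tool": "order_food", "args": {"dish": "fried rice"}}')
```

Any third-party library can be wrapped this way: write a small function around it, register it, and describe it to the LLM so it knows when to emit the matching call.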

Conclusion

This project is fun, and while learning full-stack development is a bittersweet experience, it’s worthwhile since nothing is wasted. Although I certainly can’t compare to those formally trained, as long as I’m happy, that’s what matters. I’ll continue writing depending on whether people are interested. If no one reads it, I’ll stop and go play with my cyber wife instead.

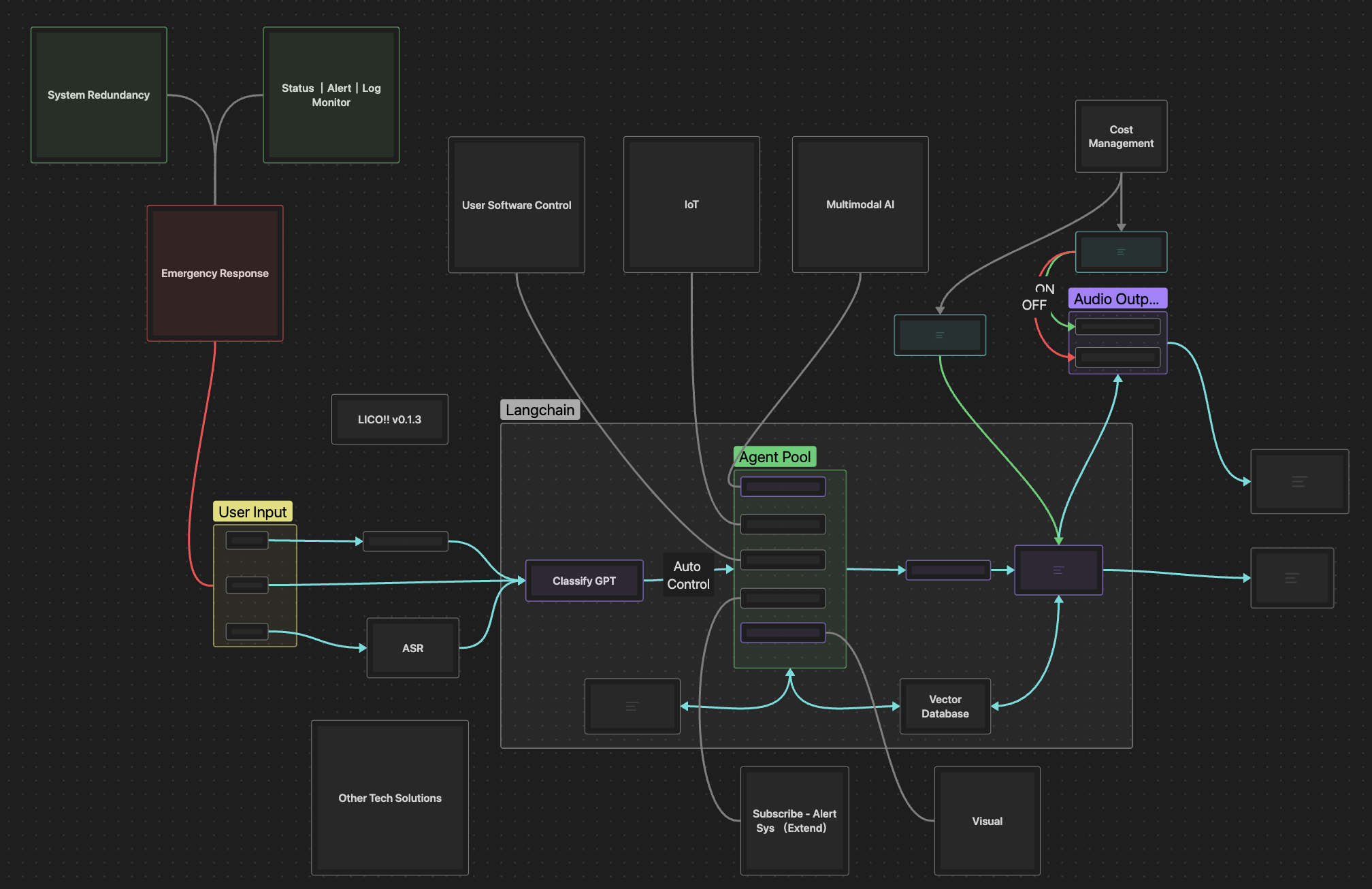

Here’s a useless framework diagram for reference:

PS: Here is a heavily stripped-down version of the LICO source code and a simple deployment method: LICO